Reducing our Carbon Docker image size further!

In this second post of the series, we keep exploring ways to reduce our Docker image size. Let's see how we manage to shrink it by a factor of 10!

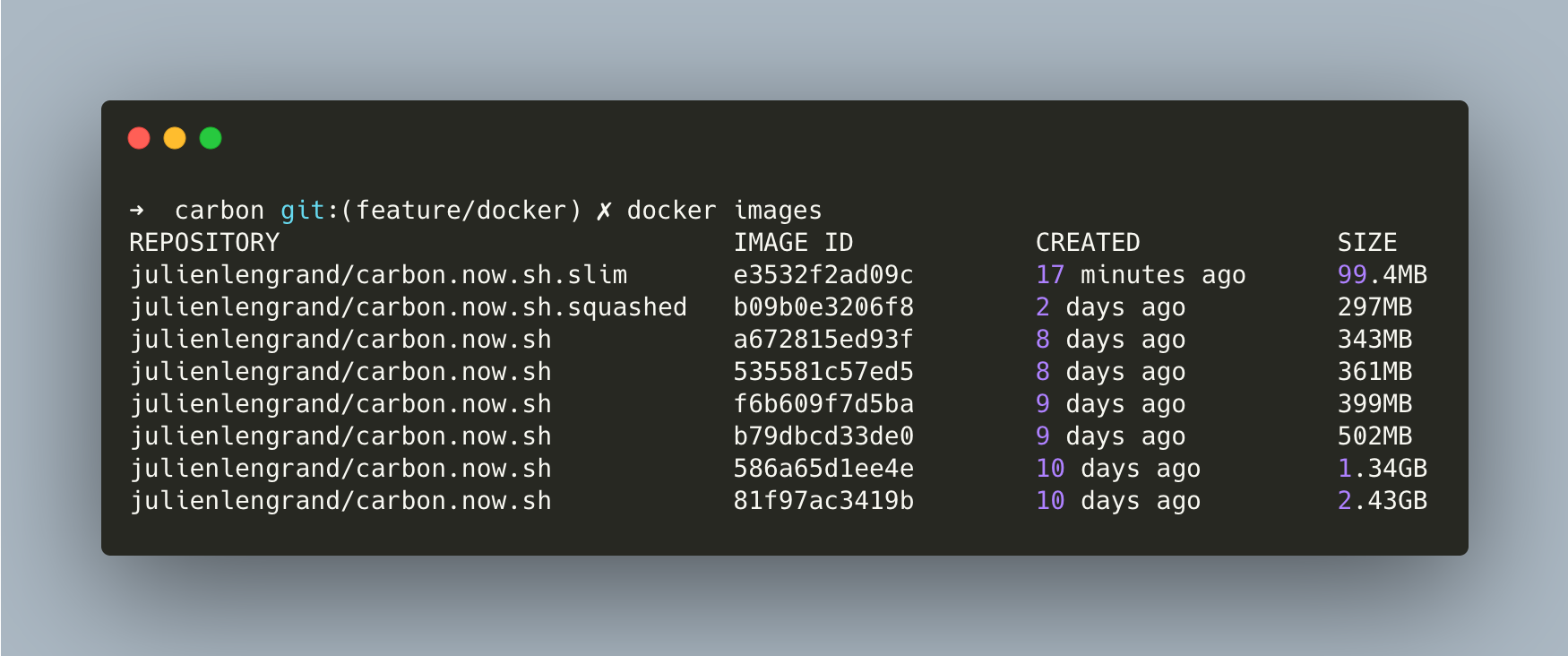

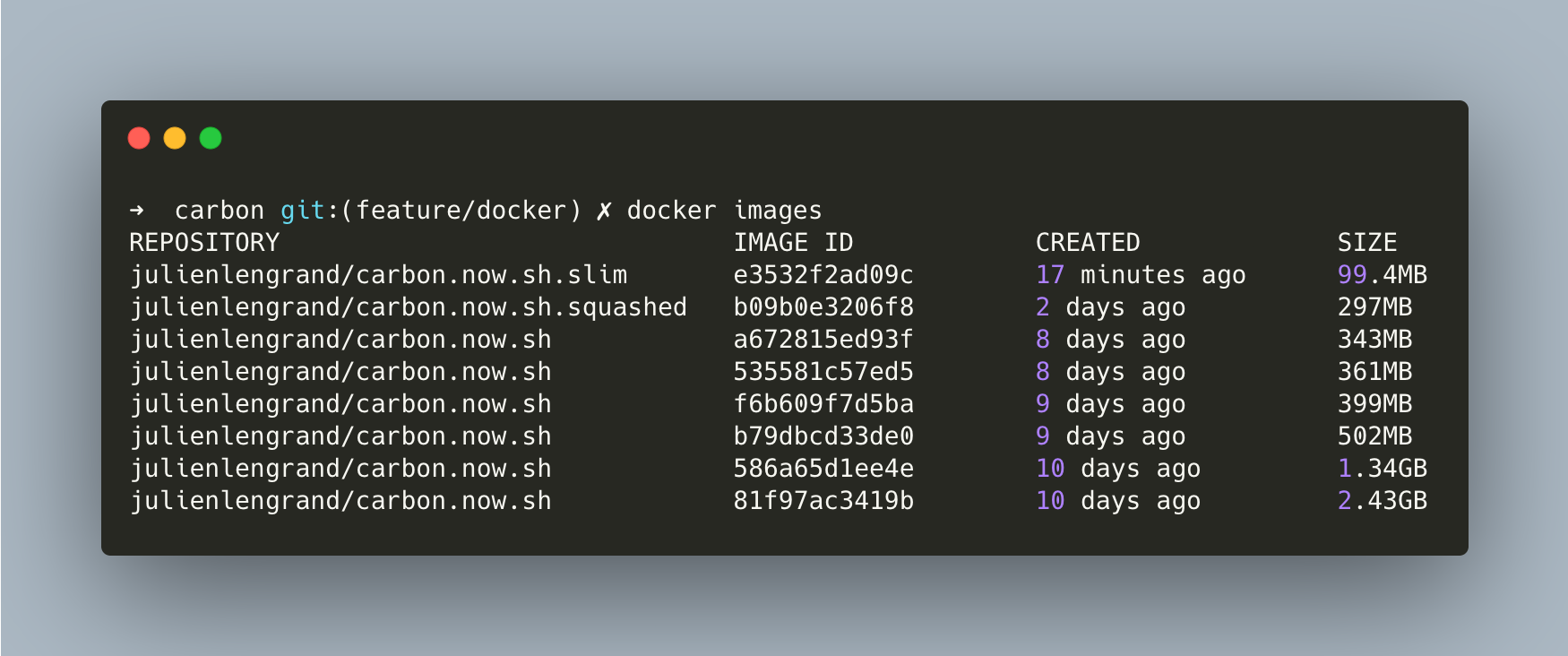

tl;dr: Starting from a (Node.js) Docker image of 2.43GB, we use a step-by-step approach to get under 100MB for a deployment-ready image!

This article is a direct follow-up to my last article: Reducing Docker's image size while creating an offline version of Carbon.now.sh.

I was still unsatisfied with the final result of 400MB for our Carbon Docker image and kept digging a little further. Let's see what additional tricks we have up our sleeves to do just that.

Removing all unnecessary files from the node_modules

During our last experiment, we got rid of all development dependencies before creating our final Docker image. It turns out that even the remaining modules contain clutter such as documentation, test files, or definition files. node-prune can help us solve that problem. We can fetch it during the build and run it after removing our development dependencies.

Now, fetching files from the big bad internet while building a Docker image can be considered bad practice for multiple reasons (mainly security and reproducibility), but given that we only use the file in our builder container, I'll accept that limitation for now.
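A common way to soften the security and reproducibility concern is to pin a checksum for whatever gets downloaded and to stop the build when it does not match. The sketch below only illustrates the idea and is not used in the Dockerfile that follows; the helper name `verify_sha256` and the `PINNED_SHA256` value are hypothetical, and it assumes `sha256sum` is available in the builder image.

```shell
# Hypothetical helper: succeed only if FILE hashes to EXPECTED (hex SHA-256).
verify_sha256() {
  file="$1"
  expected="$2"
  # sha256sum prints "<hash>  <file>"; keep only the hash.
  actual="$(sha256sum "$file" | awk '{ print $1 }')"
  [ "$actual" = "$expected" ]
}

# Possible usage (URL and PINNED_SHA256 are placeholders you would supply):
#   curl -sfL "$URL" -o install.sh
#   verify_sha256 install.sh "$PINNED_SHA256" && bash install.sh -b /usr/local/bin
```

The download is saved to a file first so that the exact bytes that were verified are the ones that get executed.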

Our Dockerfile becomes:

```dockerfile
FROM mhart/alpine-node:12 AS builder

RUN apk update && apk add curl bash

WORKDIR /app
COPY package*.json ./
RUN yarn install
COPY . .
RUN yarn build
RUN npm prune --production

RUN curl -sfL https://install.goreleaser.com/github.com/tj/node-prune.sh | bash -s -- -b /usr/local/bin
RUN /usr/local/bin/node-prune

FROM mhart/alpine-node:12

WORKDIR /app
COPY --from=builder /app .

EXPOSE 3000
CMD [ "yarn", "start" ]
```

There are three main changes:

- We fetch the node-prune script during building

- We run it at the end of the build process

- Because curl and bash are not available by default on Alpine, we have to install them!

The resulting image is 361MB, so we still shaved 30MB off our container size. Good news.

```
➜ carbon git:(feature/docker) docker images
REPOSITORY                      IMAGE ID        SIZE
julienlengrand/carbon.now.sh    535581c57ed5    361MB
```

Diving into our image

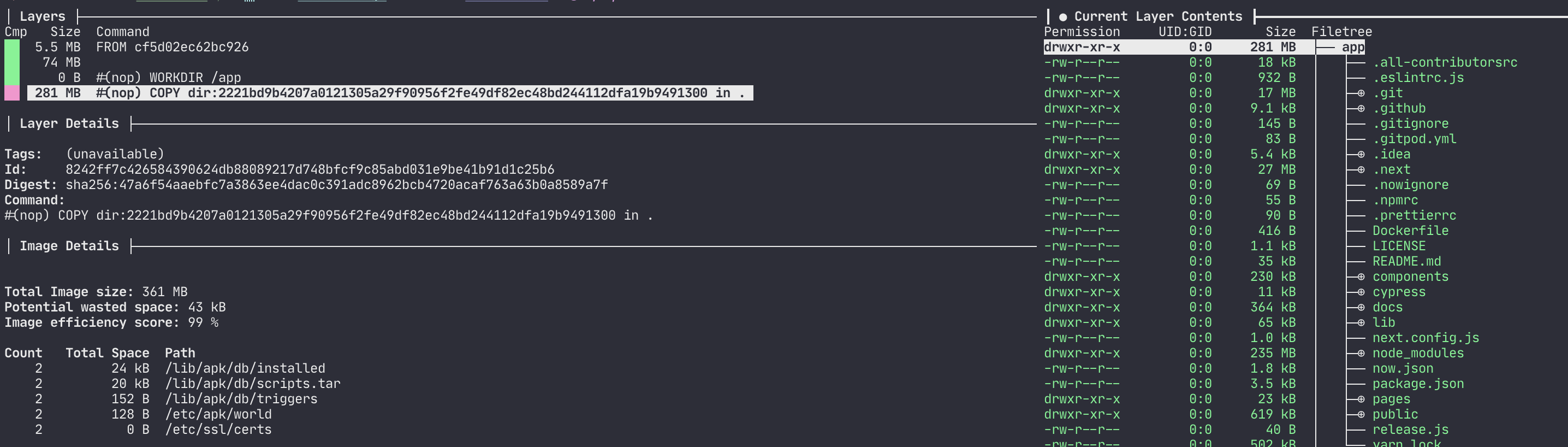

We see diminishing returns with each new step, so we'll have to look deeper into what strategic improvements we can make next. Let's look at our image, and more specifically at what is taking up space. For this, we'll use the awesome tool dive.

Alright, this view gives us some interesting information:

- The OS layer is 80MB. Not sure how much we can do about this

- We still have 281(!)MB of stuff needed to run the app

- But we also see lots of useless things in there! .git and .idea folders, docs, ...

- No matter what we do, there is still 235MB (!!!) of node_modules left to be dealt with

So in short, we can save another 30ish MB removing some auxiliary folders, but the bulk of the work would have to be done in the node_modules.
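One complementary way to keep such auxiliary folders out of an image is a `.dockerignore` file next to the Dockerfile: paths listed there are left out of the build context, so `COPY . .` in the builder stage never sees them. The snippet below is only a sketch and not part of the Dockerfile used in this post; the entries are taken from the folders named above, and `docs` assumes the folder is literally called that.

```shell
# Write a .dockerignore in the current directory (run from the project root).
# Entries are assumptions based on the folders spotted with dive.
cat > .dockerignore <<'EOF'
.git
.idea
docs
EOF
```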

We'll modify the Dockerfile to copy only the files required to run the app (it's probably possible to do a bulk copy, but I haven't found an answer I liked just yet).

```dockerfile
FROM mhart/alpine-node:12 AS builder

RUN apk update && apk add curl bash

WORKDIR /app
COPY package*.json ./
RUN yarn install
COPY . .
RUN yarn build
RUN npm prune --production

RUN curl -sfL https://install.goreleaser.com/github.com/tj/node-prune.sh | bash -s -- -b /usr/local/bin
RUN /usr/local/bin/node-prune

FROM mhart/alpine-node:12

WORKDIR /app
COPY --from=builder /app/.next ./.next
COPY --from=builder /app/components ./components
COPY --from=builder /app/lib ./lib
COPY --from=builder /app/node_modules ./node_modules
COPY --from=builder /app/pages ./pages
COPY --from=builder /app/public ./public
COPY --from=builder /app/next.config.js ./next.config.js
COPY --from=builder /app/LICENSE ./LICENSE
COPY --from=builder /app/package.json ./package.json

EXPOSE 3000
CMD [ "yarn", "start" ]
```

We save some more space, as expected:

```
➜ carbon git:(feature/docker) docker images
REPOSITORY                      IMAGE ID        SIZE
julienlengrand/carbon.now.sh    a672815ed93f    343MB
```
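As for the bulk copy, one possible approach is to gather the runtime files into a single staging folder at the end of the builder stage, so that the final stage needs only one COPY line. The sketch below is an untested idea rather than what this post uses; the helper name `stage_runtime_files` and the `/dist` folder are hypothetical.

```shell
# Hypothetical staging helper: copy only the listed paths from SRC into DEST.
stage_runtime_files() {
  src="$1"
  dest="$2"
  shift 2
  mkdir -p "$dest"
  for path in "$@"; do
    # cp -R handles both single files and whole folders.
    cp -R "$src/$path" "$dest/"
  done
}

# In the builder stage this could run as:
#   stage_runtime_files /app /dist .next components lib node_modules pages \
#     public next.config.js LICENSE package.json
# and the final stage would then need a single line:
#   COPY --from=builder /dist .
```

The trade-off is an extra copy of node_modules inside the builder stage, which costs build time but never reaches the final image.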

Checking production node modules

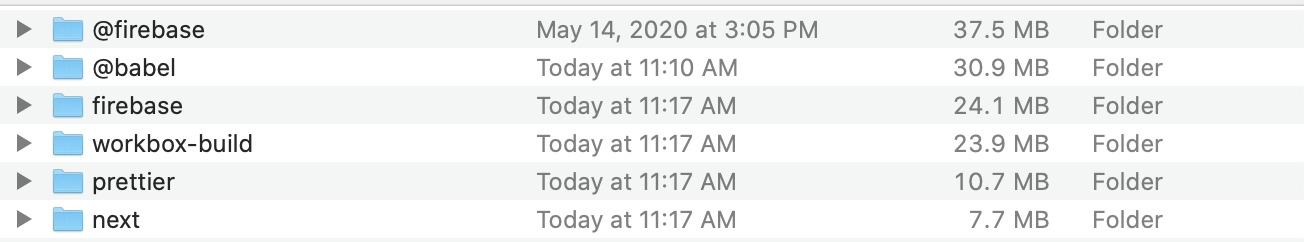

The next thing I did was to look at the leftover node_modules dependencies that make it into the production build. Here are the top 5 biggest dependencies, sorted by size.
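A ranking like this can be approximated with standard tools. The sketch below is one way to do it, not necessarily how the numbers in this post were obtained; the function name `largest_modules` is made up for the example.

```shell
# List the N largest entries directly under a node_modules folder (sizes in KB).
# Scoped packages such as @babel/... are grouped under their scope folder.
largest_modules() {
  dir="${1:-node_modules}"
  count="${2:-5}"
  du -sk "$dir"/* | sort -rn | head -n "$count"
}

# Possible usage, from the project root after pruning dev dependencies:
#   largest_modules node_modules 5
```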

Some quick observations:

- Firebase is responsible for a whopping 60MB in our image

- Next is large, but required to run the app.

- All of the others, especially prettier, seem like they should be dev dependencies

We'll have to investigate this further.

- The application uses Firebase. Looking at the documentation, you can indeed import only what you need, but the library will download everything anyway, so there is not much we can do there.

- It looks like prettier is actually used in production, so we can't do anything about that.

- The application is a Next.js app, so it sounds logical that it needs next.

We don't see any mention of the other dependencies in the package.json file. Let's use $ npm ls on the production dependencies to see where they're coming from.

```
carbon@4.6.1 /Users/jlengrand/IdeaProjects/carbon
├─┬ ...
├─┬ next@9.4.1
│ ├─┬ ...
│ ├─┬ @babel/core@7.7.7
├─┬ ...
├─┬ next-offline@5.0.2
│ ├─┬ ...
│ └─┬ workbox-webpack-plugin@5.1.3
│   ├── ....
│   └─┬ workbox-build@5.1.3
│     ├─┬ @babel/core@7.9.6
```

So it seems like babel and workbox are also pulled in by the Next.js side of things (next and next-offline). We may have reached a dead end.

Back to Docker : Docker squash

We've looked into the application itself and decided we couldn't get clear wins any more. Let's move back to Docker. Can we pass the 300MB barrier with some extra steps?

When building an image, it is possible to tell Docker to squash all the layers together. Mind that it is a one-way operation: you won't be able to go back. It might also be counter-productive if you run a lot of containers with the same base image. But it allows us to save some extra space. The only thing we have to do is add the --squash option to our docker build command.

In our case, I deem this acceptable because we don't run any other node apps in our cluster and this is a one time experiment.

Here is the result:

```
$ docker build --squash -t julienlengrand/carbon.now.sh.squashed .
➜ carbon git:(feature/docker) ✗ docker images
REPOSITORY                               IMAGE ID        SIZE
julienlengrand/carbon.now.sh.squashed    b09b0e3206f8    297MB
julienlengrand/carbon.now.sh             a672815ed93f    343MB
```

Well, that's it, we made it! We are under 300MB! But I'm sure we can do even better.

Back to Docker : Docker slim

There are many tools I had never heard of before starting this fun quest. A few of them were suggested to me by friends on LinkedIn. One of those is docker-slim. Docker-slim claims to optimize and secure your containers without you having to do anything about it. Have a look at the project; some of the results are quite surprising indeed.

To work with docker-slim, you first have to install the tool on your system and then ask it to run against your latest Docker image. Of course there are many more options available to you. Docker-slim will run your container, analyze it and come out with a slimmed down version of it.

When I ran it the first time, I got extremely good results, but docker-slim deleted the whole app from the container XD. I opened an issue about it.

Manually adding the app path to the configuration fixes the issue, but I guess it also prevents most of the optimizations.

Running docker-slim leads to the following results:

```
$ docker-slim build --include-path=/app julienlengrand/carbon.now.sh.squashed:latest
➜ carbon git:(feature/docker) ✗ docker images
REPOSITORY                                    IMAGE ID        SIZE
julienlengrand/carbon.now.sh.squashed.slim    8c0d8ac87f74    273MB
julienlengrand/carbon.now.sh.squashed         a672815ed93f    297MB
```

Not amazing, but hey we're still shaving another 20MB with a pretty strong limitation on our end so it's still quite something.

EDIT: Docker-slim : Part 2!

The author of docker-slim helped me on the open issue, and it appears that I only had to include the .next folder of the app for it to work as expected. This gives us MUCH better results, and we can get under 100MB! It also looks like we can get rid of the squashing step, since it reduces the performance of docker-slim! Killing 2 birds with one stone, how nice :).

Our command line becomes:

```
$ docker-slim build --include-path=/app/.next julienlengrand/carbon.now.sh.squashed:latest
➜ carbon git:(feature/docker) ✗ docker images
REPOSITORY                                    IMAGE ID        SIZE
julienlengrand/carbon.now.sh.squashed.slim    f3d2078d3650    104MB # less performant
julienlengrand/carbon.now.sh.slim             e3532f2ad09c    99.4MB
julienlengrand/carbon.now.sh                  a672815ed93f    343MB
```
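To put these numbers in perspective, the sizes reported by docker images can be turned into relative savings. The small helper below is only an illustration (the name `percent_saved` is made up); the sizes passed to it are the ones listed in this post, in MB.

```shell
# Print how much smaller AFTER is than BEFORE, as a percentage.
percent_saved() {
  awk -v before="$1" -v after="$2" \
    'BEGIN { printf "%.1f%%\n", (before - after) / before * 100 }'
}

percent_saved 361 343    # copying only the required files
percent_saved 343 297    # docker build --squash
percent_saved 343 99.4   # docker-slim with --include-path=/app/.next
```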

Other ideas I looked into:

- Vercel, the company behind Next.js, has a packaging tool called pkg that allows creating executables and getting rid of the whole node ecosystem in the process. It looked interesting but requires the application to run on a custom server, which carbon does not. Given that I wanted to keep the node application as-is and simply create a layer on top of it, that rules out this solution.

- Similarly, I looked into GraalVM, and specifically GraalJS. Using a polyglot GraalVM setup should produce optimized, small executables. I even got quite some starting help on Twitter for it. I easily managed to run carbon on the GraalVM npm, but my attempts to create a native image of the project have been a failure so far. I should probably look at it again in the future.

Conclusion

We started our first post with a 'dumb' Dockerfile and a 2.53GB image. With some common sense, we were able to quickly trim it down to less than 400MB. But digging even further, we see that we can go beyond that and reach just under 100MB. I find that interesting because on my local machine, that's about half the size of the node_modules for the project!

I learnt a few things:

- As we write code and build new applications every day, it is important to keep size and performance in mind. It is impressive to see how quick it was to reduce the size of the final deliverable by more than a factor of 10! How many containers today could still be optimized?

- Some tools and languages seem less container-friendly than others. It is likely that a Go or Rust program would have a much lower footprint. We have seen how heavy our node_modules folder was here. It makes sense for the Carbon project to have gone the serverless route.

- More and more technologies seem to offer 'native' compilation, and should help reduce the memory cost of running applications. I named only 2 here (GraalVM and pkg, but there are more). We hear about them a lot lately, but I wonder how widespread their adoption is in the wild today. It can only improve.

That's it! I hope you enjoyed the ride, and see you another time!